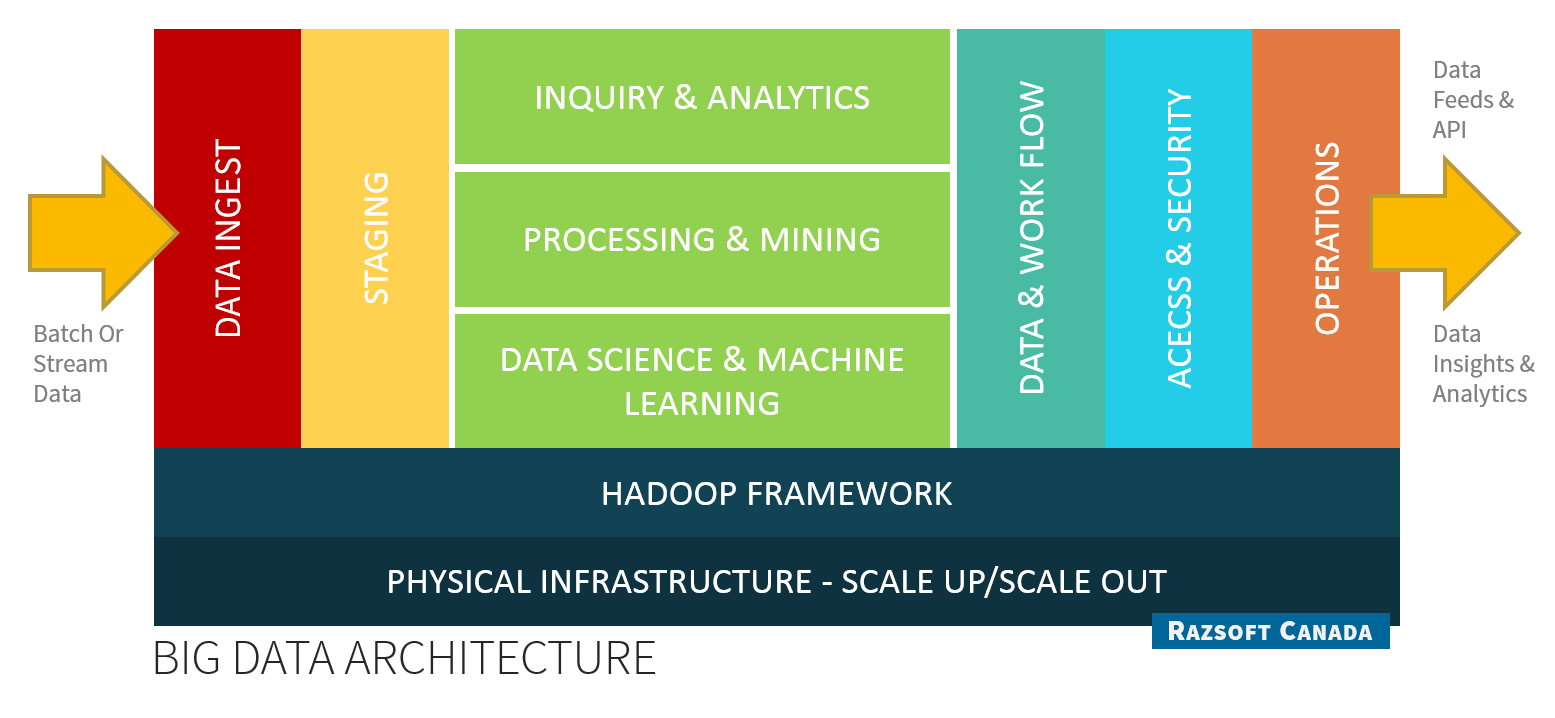

Big Data Architecture

Big Data architecture is an evolving practice for developing an automated data pipeline on a reliable, scalable, and lower-cost platform, in order to gather and maintain large collections of data, and to process them with advanced statistical models that extract useful insights and information.

What is Big Data

Big Data is a popular term used to describe the tools, technologies and practices for processing and analyzing massive datasets that traditional data warehouse applications were unable to handle. The Big Data landscape, including its technologies and practices, is rapidly evolving. Big Data is often defined by five Vs; however, some of this data and the related sciences existed long before the term. Hence, Big Data is a collection of data from traditional and digital sources, inside or outside the enterprise, structured or unstructured, that represents a source for ongoing discovery and analysis.

Five V's

Big Data is often defined by five Vs:

- Volume: the amount of data, e.g. large data generated by machine sensors.

- Velocity: the speed at which data is generated and flows into the enterprise, e.g. streams of social media data or telecom call detail records (CDRs).

- Variety: the kind of data available, e.g. structured/unstructured, documents, multimedia etc.

- Veracity: the accuracy and quality of the data.

- Value: the economic value of the data.

Hadoop Framework

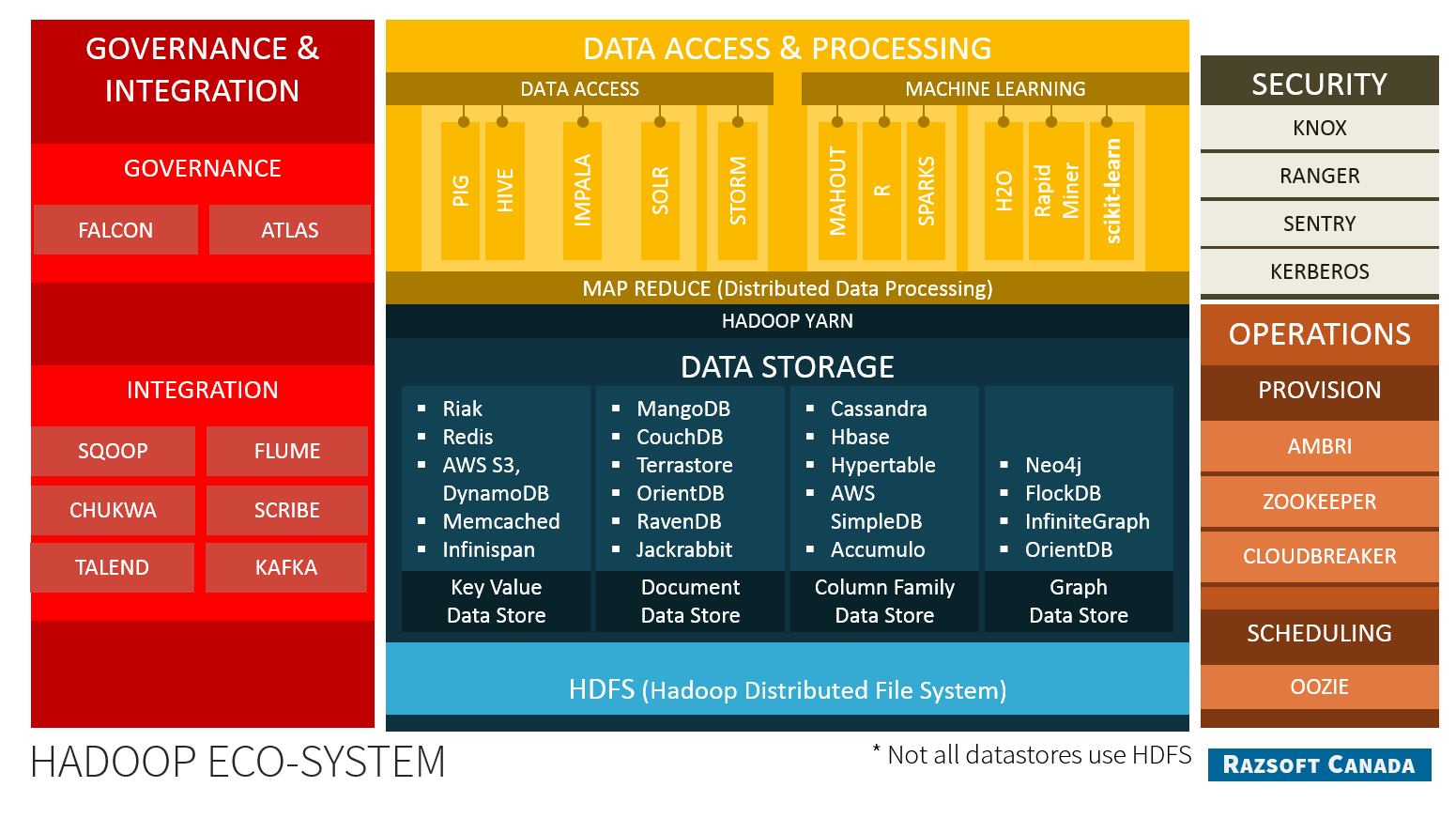

Hadoop is a framework and set of tools for processing very large data sets. It was designed to work on clusters of servers built from commodity hardware, providing powerful parallel processing on compute and data nodes at a very low price. Technology is rapidly advancing for both software and engineered hardware, making this framework even more powerful. Hadoop implements a computational paradigm known as MapReduce, which was inspired by an architecture developed by Google to implement its search technology, and is based on the "map" and "reduce" functions from Lisp programming.

Today a large number of tools and technologies plug into Hadoop; together they are commonly termed the Hadoop or Big Data ecosystem.
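The map and reduce idea can be illustrated with a small word count. This is a minimal single-process sketch in plain Python, not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`) and the sample input are assumptions made for illustration, and a real cluster would run the map and reduce steps in parallel on different nodes.

```python
from collections import defaultdict
from functools import reduce


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: fold all values for one key into a single result."""
    return key, reduce(lambda a, b: a + b, values)


def word_count(documents):
    # Each document stands in for an input split handled by one mapper.
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


# Hypothetical input splits.
splits = ["big data big insight", "data pipeline"]
counts = word_count(splits)
print(counts)
```

Because each call to `map_phase` looks at only one split and each call to `reduce_phase` looks at only one key, both steps can be spread across many nodes, which is what makes the paradigm suitable for a cluster.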

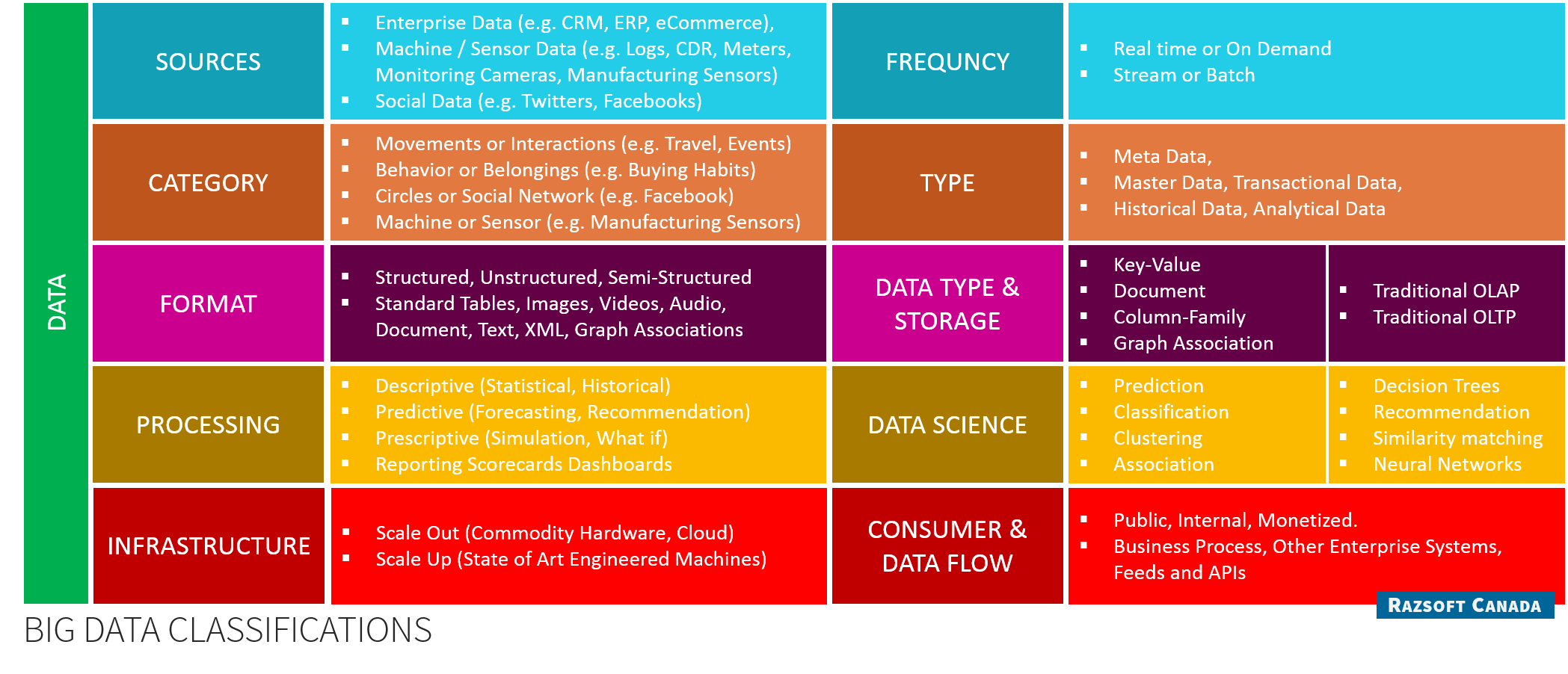

Classifications of Big Data

Categorizing business problems, and classifying the nature and type of Big Data that needs to be sourced, helps in understanding the type of Big Data solution required. The general characteristics of data deal with how to acquire the data, what its structure and format are, how frequently it becomes available, what its size is, what processing is required to transform it, and what algorithms or statistical models are required to mine it.

Defining Business Problems

Data can be classified by the variety of business problems found in industry, e.g.:

- Market Sentiment Analysis: Fusing social media feeds, customer issues, customer feedback and profiles to generate customer sentiments about products and services.

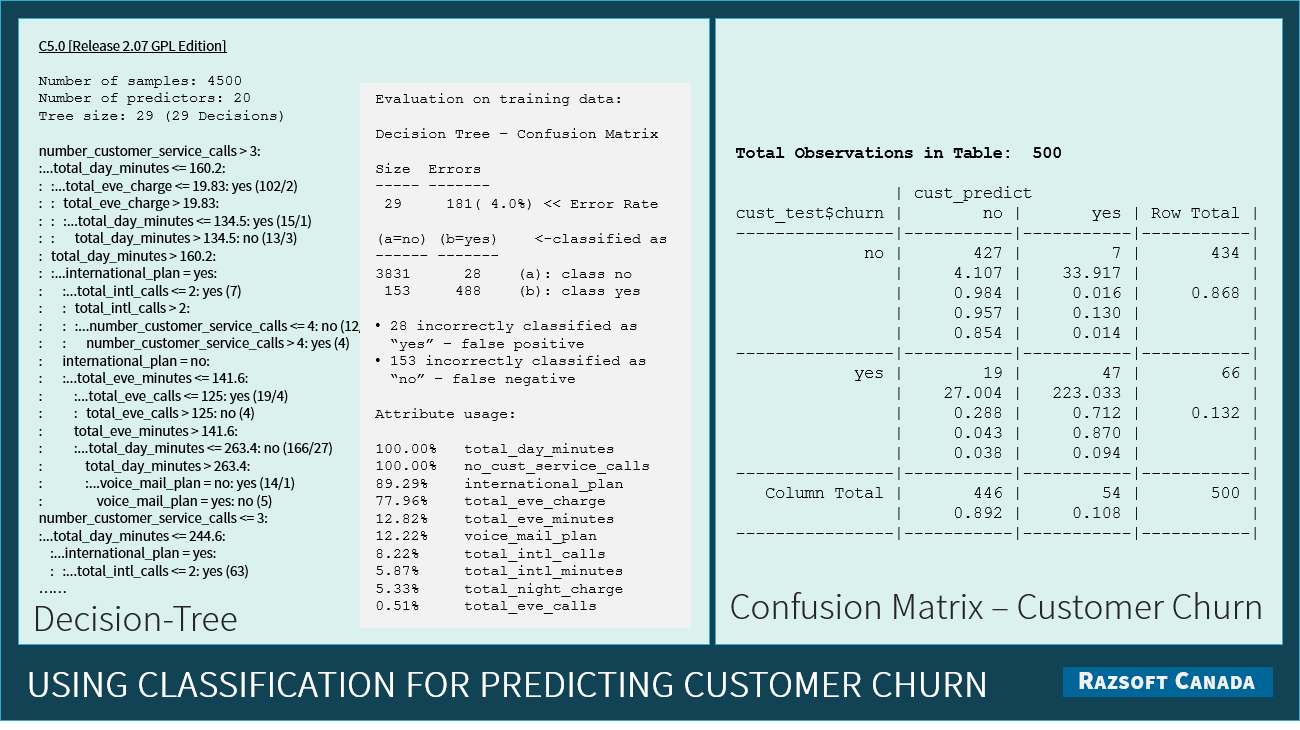

- Telecom Churn Analysis: Using Customer Profiles, Demographics, Segmentation, Transactions, and CDRs, fusing them with Social behavior, and applying predictive analysis and trending to forecast churn.

- Fraud Detection: Using Customer Profiles, Account Information, Transactions, Credit Data and Scoring, and fusing them in real time with Social behavior and other data from partners to detect and stop fraud.

Data Acquisition

Today data is pumped through a variety of sources, including data sensors, mobile networks, the internet, and traditional commercial and non-commercial data, to discover new economic value. Enterprises are discovering how more information can be derived from existing data, while at the same time sourcing data from external players. Some of the sources are:

- Enterprise data available in existing OLTP or OLAP systems.

- Archived data that has not been tapped, e.g. log data, machine data etc.

- Partnerships with key industry players to fuse data across industries such as Telecom, Travel, Financial, and Entertainment.

- Integration of data sources with aligned and external businesses to derive 360-degree insights.

- Social media data or internet data.

Big Data Management Life Cycle

Data Management is a critical process by which an enterprise manages its data. The process involves defining, governing, cleansing, and securing all data in the enterprise, and managing its quality. It ensures the quality of data and that there is a single source of truth. While Big Data is part of the overall Data Management process in an enterprise, it demands its own unique cycle due to its nature.

Big Data Management Life Cycle can be described in six key steps: i) Acquire ii) Classify & Organize iii) Store iv) Analyze v) Share & Act vi) Retire

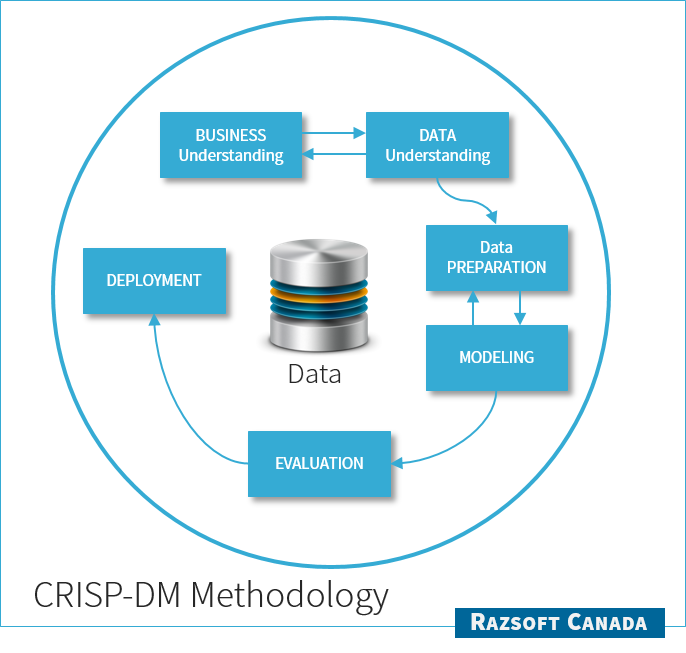

CRISP-DM Methodology

CRISP-DM stands for Cross-Industry Standard Process for Data Mining. It is a comprehensive data mining methodology and process model that provides a complete blueprint for conducting a data mining project. It is a robust and well-proven methodology. It breaks down data mining projects into six steps:

- Business Understanding: Objectives, requirements, and problem definition

- Data Understanding: Initial data collection, familiarization, data quality issues; Initial, obvious results

- Data Preparation: Record and attribute selection; Data cleansing

- Modeling: Run the data mining tools

- Evaluation: Determine if results meet business objectives; Identify business issues that should have been addressed earlier

- Deployment: Put the resulting models into practice; Set up for continuous mining of the data

Big Data Infrastructure

While enterprises are rushing to process their unstructured data into actionable business intelligence, they are first required to create an infrastructure with a compute and storage architecture that can deal with petabytes of high-velocity data.

Virtualization

Virtualization is fundamental to both Cloud Computing and Big Data. It provides high efficiency and scalability for the Big Data platform, and gives MapReduce the distributed environment it needs to scale. Virtualization is desirable across all IT infrastructure layers, including servers, storage, applications, data, networks, processors, and memory, to provide optimum scalability and performance in a distributed environment.

Scale up / Scale out

Scaling is expanding an infrastructure (compute, storage, networking) to meet the growing needs of the applications that run on it. Scaling up is replacing current technology with something more powerful, e.g. replacing a 1GbE switch with a 10GbE switch.

Scaling out means taking the current infrastructure and replicating it to work in parallel in a distributed environment. In a Big Data environment, nodes are typically clustered to provide scalability. When more resources are required, additional nodes can simply be added to the cluster, adding more compute power or storage space. A virtualized or cloud environment can provide such scalability (termed elasticity), where additional nodes can be built on the fly when needed, and destroyed once the processing has finished.

Security

Security is of paramount concern in a big data environment due to the nature of the data collected, privacy concerns, regulations and compliance. A careful security policy is required to mitigate risks, including good access control both internally and externally, and audit logs for data access. In addition to access security, a number of data safeguarding techniques can be applied to secure the data, e.g.

- Tokenization (e.g. replace credit card # with random token)

- Sanitization (either encrypt or remove data that can uniquely identify an individual, e.g. name, national id)

- Data isolation (isolate sensitive data into a separate zone, e.g. PCI Zones)
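The first two techniques can be sketched in a few lines of Python. This is an illustrative sketch only: `TokenVault` is a hypothetical in-memory stand-in for a secured token service, the field names and sample record are made up, and a production system would keep the token mapping in a hardened, access-controlled store.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault mapping random tokens to sensitive values."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        # Reuse the existing token so the same value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)  # random, not derived from the value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        return self._token_to_value[token]


def sanitize(record, identifying_fields=("name", "national_id")):
    """Return a copy of the record with directly identifying fields removed."""
    return {k: v for k, v in record.items() if k not in identifying_fields}


vault = TokenVault()
record = {
    "name": "Jane Doe",          # made-up sample data
    "national_id": "X123",
    "card_number": "4111111111111111",
    "amount": 42.5,
}

safe = sanitize(record)
safe["card_number"] = vault.tokenize(record["card_number"])
print(safe)
```

The analytics platform then works only with `safe`; the original card number can be recovered through `vault.detokenize` by the few systems that are allowed to reach the vault.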

Cloud

Cloud provides three key benefits for Big Data: scalability, elasticity and flexibility. Resources can be added and removed in real time as needed, quickly and at lower cost in comparison to traditional procurement of private infrastructure. Cloud service options are available at each layer, i.e. Infrastructure (IaaS), Platform (PaaS), Software (SaaS), and Data (DaaS), and can be structured as a Private Cloud (private data center) or a Public Cloud (external vendor service on a pay-per-use basis). Examples of cloud services:

- Amazon Big Data - EC2, Elastic MapReduce, DynamoDB, S3 Storage, Redshift

- Google Big Data - Compute, BigQuery, Prediction API

- Microsoft Azure - Based on Hortonworks HDP

Big Data Databases

Big Data consists of structured, unstructured and semi-structured data, and includes relational databases (OLTP or OLAP) as well as non-relational databases such as key-value, document, columnar, graph or geospatial data stores. A typical Big Data implementation will include multiple databases to serve the different needs of Big Data analytics, a theme known as polyglot persistence.

A Big Data store faces many challenges. First, it may receive fairly large data volumes at high velocity in real time or near real time. Second, its data sources may not be trustworthy, e.g. internet or social media. Third, it may receive dirty data that is inaccurate or inconsistent, so cleansing and data quality may be an enormous task. Fourth, data may be noisy, and even in cleansed data only a small portion may be of business value. Lastly, data may include personal or private information that is subject to regulatory and compliance laws and privacy concerns. Hence, Data Governance, Data Privacy, and the Data Life Cycle become critical in a Big Data environment.

NoSQL

NoSQL stands for "Not Only SQL". It has no formal definition; it is an umbrella term for data stores that model data by means other than the tabular relations used in relational databases, though many support SQL-like query languages. The term was first used in 1998 for an open source relational database, and became popular in 2009 when Johan Oskarsson organized an event to discuss distributed databases under the name NoSQL.

Big Data databases can be categorized as:

- Relational: Traditional RDBMS. Oracle, SQL Server, PostgreSQL etc.

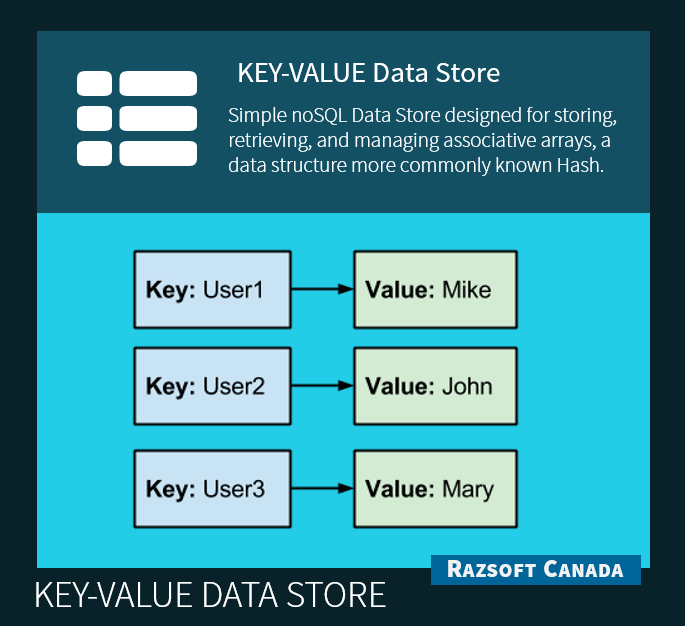

- Key-Value: Simple NoSQL data store that supports key-value pairs. Riak, Redis, Amazon (S3, DynamoDB), Memcached, Infinispan etc.

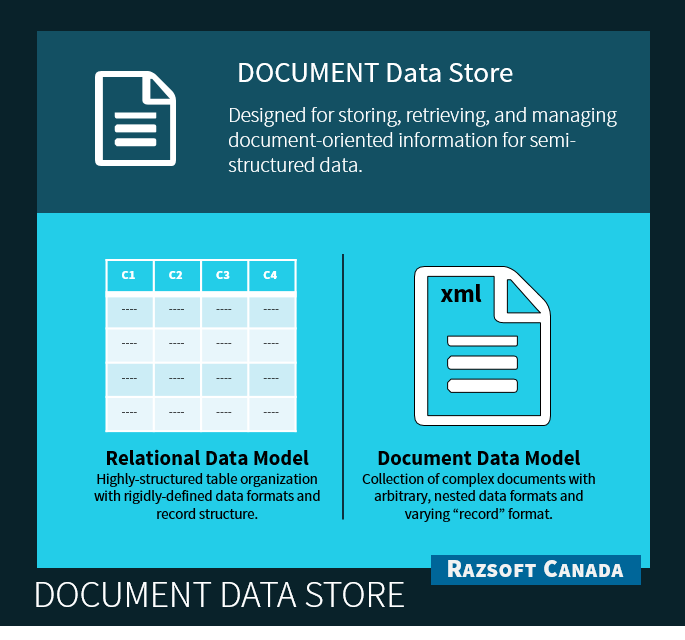

- Document: Semi-structured, no pre-defined schema. MongoDB, CouchDB, Terrastore, OrientDB, RavenDB, Jackrabbit etc.

- Columnar: Unstructured. Stores data in tabular format (in columns). Cassandra, HBase (like Google Bigtable), Hypertable, Amazon (SimpleDB) etc.

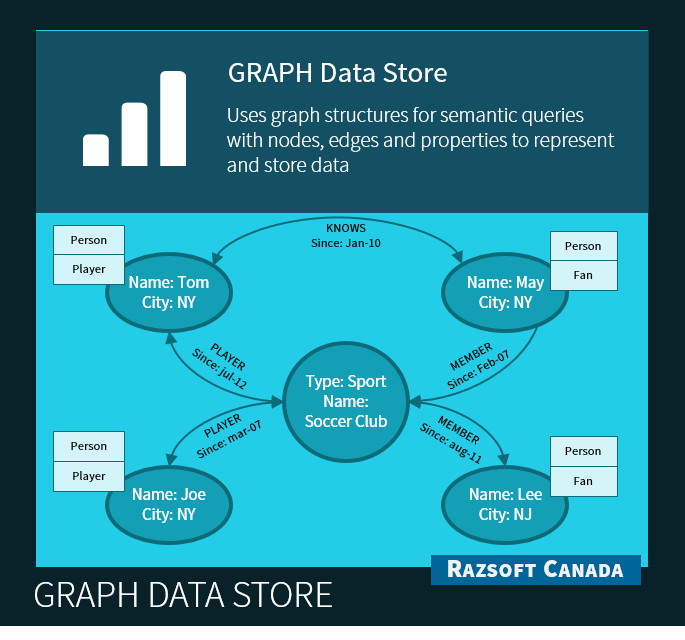

- Graph: Stores entities and their relationships in a graph data structure. Neo4j, FlockDB, InfiniteGraph, OrientDB etc.

- Spatial: A geo-database optimized to store and query data that represents objects defined in a geometric space. Can store 2D/3D objects. Spatial data is standardized by the Open Geospatial Consortium (OGC), which establishes OpenGIS (Geographic Information System) and other spatial data standards. Oracle Spatial, PostGIS etc.
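To make the categories more concrete, the sketch below shows how the same made-up facts about a customer might be shaped in four of these models. Plain Python structures stand in for the databases; the keys, field names, and values are assumptions for illustration, and real products differ in how they lay data out.

```python
# Key-value: an opaque value looked up by a single key.
key_value = {"customer:42": '{"name": "Ada", "city": "Oslo"}'}

# Document: a self-describing, nested record with no pre-defined schema.
document = {
    "_id": 42,
    "name": "Ada",
    "orders": [{"product_id": 7, "qty": 2}],
}

# Columnar: values of one column are kept together, so an aggregate
# only needs to read the columns it uses.
columnar = {
    "customer_id": [42, 43],
    "city": ["Oslo", "Lima"],
    "qty": [2, 5],
}

# Graph: entities are nodes, relationships are typed edges.
graph_edges = [("customer:42", "BOUGHT", "product:7")]

# Typical access patterns for two of the shapes.
total_qty = sum(columnar["qty"])
bought = [dst for src, rel, dst in graph_edges
          if src == "customer:42" and rel == "BOUGHT"]
print(total_qty, bought)
```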

A major difference between traditional RDBMS and NoSQL databases is ACID vs BASE.

- Traditional relational databases support ACID (Atomicity, Consistency, Isolation, Durability).

- Most NoSQL stores lack true ACID transactions; rather, they support BASE (Basically Available, Soft state, Eventual consistency).

The term polyglot persistence refers to the use of several core database technologies in a single application process. Organizations already use multiple databases for various applications such as OLTP, OLAP, CMS, data marts etc., but the practice becomes much more prominent with Big Data, whose nature is to deal with a "variety" of data types (structured or unstructured) as well as varying processing needs (real time, near real time etc.).

For example, an eCommerce application requires a shopping cart, product catalog, stock inventory, orders, payments, user profiles, user sessions, recommendations etc. Instead of trying to store all these different data types in one database, it is more suitable to store each in the environment that best suits it and its desired persistence. E.g.

- User-session can be stored in Key-Value Data store.

- Shopping Cart and Product Catalog can be stored in a Document Data store.

- Recommendations can be stored in a Graph Data store.

- Orders can be stored in RDBMS.

- Analytics can be stored in a Columnar Data store.
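The mapping above can be sketched as a small routing layer. This is an illustration under stated assumptions, not a reference design: `PolyglotRepository`, `STORE_FOR`, and the data-type names are hypothetical, and in-memory dictionaries stand in for the real database clients.

```python
from enum import Enum


class Store(Enum):
    KEY_VALUE = "key-value"
    DOCUMENT = "document"
    GRAPH = "graph"
    RELATIONAL = "relational"
    COLUMNAR = "columnar"


# Which kind of store each application data type is sent to.
STORE_FOR = {
    "user_session": Store.KEY_VALUE,
    "shopping_cart": Store.DOCUMENT,
    "product_catalog": Store.DOCUMENT,
    "recommendation": Store.GRAPH,
    "order": Store.RELATIONAL,
    "analytics": Store.COLUMNAR,
}


class PolyglotRepository:
    """Routes each read and write to the backend chosen for its data type."""

    def __init__(self):
        # One dict per store type stands in for a real database connection.
        self.backends = {store: {} for store in Store}

    def save(self, kind, key, value):
        store = STORE_FOR[kind]
        self.backends[store][(kind, key)] = value
        return store

    def load(self, kind, key):
        return self.backends[STORE_FOR[kind]][(kind, key)]


repo = PolyglotRepository()
session_store = repo.save("user_session", "abc123", {"user_id": 42})
order_store = repo.save("order", 1001, {"user_id": 42, "total": 99.0})
print(session_store.value, order_store.value)
```

Keeping the routing table in one place means application code asks for "an order" or "a session" without knowing which database technology sits behind it.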

Big Data Analytics

Big Data Analytics is about gathering and maintaining large collections of data, and extracting useful data insights and information from these data collections.

Big Data Analytics not only provides tools and techniques for applying advanced statistical models to data collections; it is also a completely different way of processing and extracting information compared to traditional data analytics.

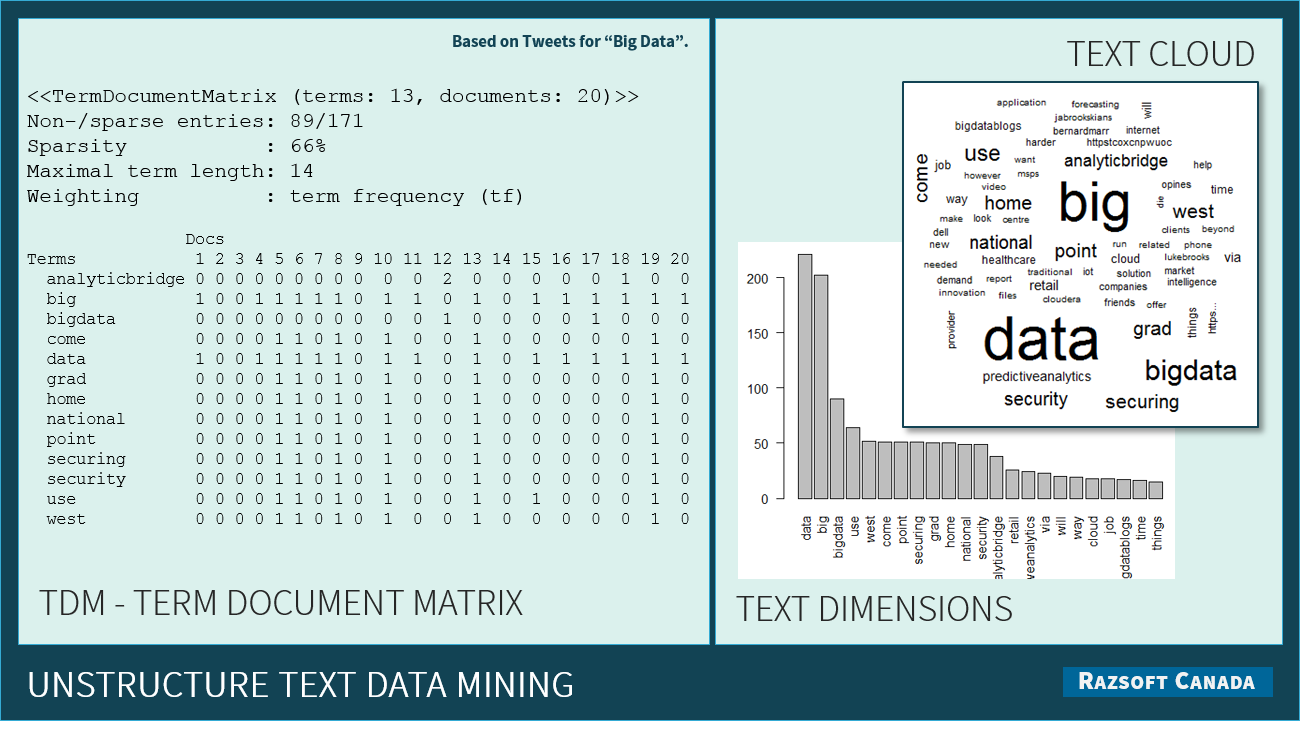

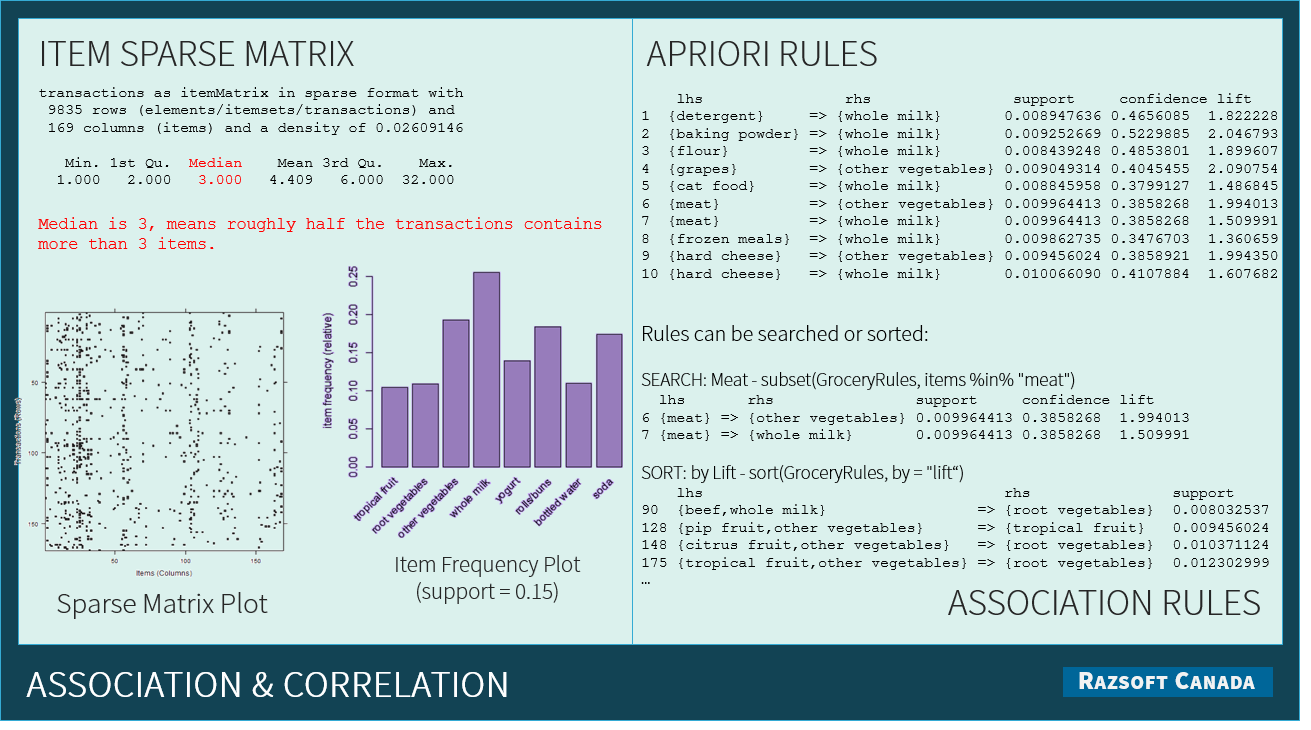

Big Data Analytics uses statistical modeling to provide deep insights into data using Data Mining techniques. Data Mining involves exploring and analyzing large datasets to find patterns in that data. These techniques are used in Statistics and Artificial Intelligence (AI), and they rest on mathematics such as Linear Algebra, Calculus, Probability Theory, Graph Theory etc.

Data Mining is generally divided into four groups: i) Descriptive, ii) Diagnostic, iii) Predictive and iv) Prescriptive analysis. Descriptive analytics summarizes historical data to analyze performance, e.g. sales. Diagnostic analytics examines that data to explain why something happened. Predictive analytics attempts to predict the future based on descriptive data, business rules, algorithms, and often human bias, using statistical modeling techniques. Prescriptive analytics builds on the descriptive and predictive analyses and provides advice based on what-if scenarios. It prescribes an action, so the business decision-maker can take this information and act upon it.
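The difference between descriptive, predictive, and prescriptive analysis can be shown on a tiny example. The sketch below is an illustration under stated assumptions: the monthly sales figures are made up, a straight-line least squares fit is just one simple choice of predictive model, and `recommend` is a hypothetical what-if rule rather than a real decision engine.

```python
from statistics import mean

# Made-up monthly sales figures for illustration.
monthly_sales = [100.0, 110.0, 120.0, 130.0]

# Descriptive: summarize what already happened.
average = mean(monthly_sales)
growth = monthly_sales[-1] - monthly_sales[0]


def fit_line(ys):
    """Ordinary least squares fit of y = slope * x + intercept, with x = 0, 1, 2, ..."""
    xs = range(len(ys))
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    return slope, y_bar - slope * x_bar


# Predictive: extrapolate the fitted trend one month ahead.
slope, intercept = fit_line(monthly_sales)
forecast = slope * len(monthly_sales) + intercept


def recommend(forecast, capacity):
    """Prescriptive: turn the forecast into an action for a given what-if capacity."""
    return "increase stock" if forecast > capacity else "hold stock"


print(average, growth, forecast, recommend(forecast, capacity=125.0))
```

The same forecast leads to different actions under different what-if capacities, which is the step that separates prescriptive analysis from prediction alone.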

Analytics, Reporting & Dashboard

This covers building basic analytics, operational reporting and dashboards. It may involve developing descriptive statistics, breaking down data along various dimensions, building reports to monitor thresholds and KPIs, and building reports to detect anomalies, fraud, and security breaches.

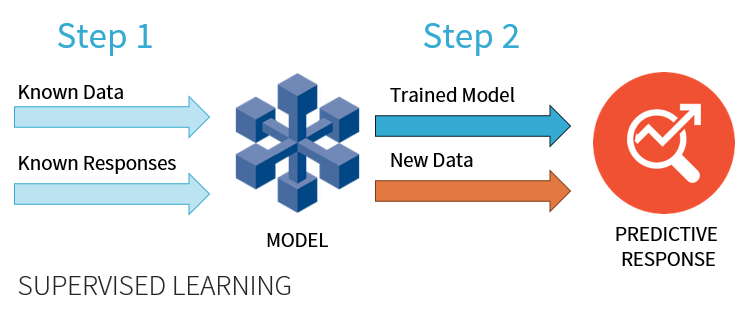

Data Mining & Data Science

This covers applying Data Science to mine data. It involves sophisticated algorithms, statistical models, machine learning, neural networks, and text analytics. It includes descriptive, diagnostic, predictive or prescriptive statistics, applying supervised or unsupervised machine learning techniques. For instance, a Telecom company may predict customer behavior to manage churn. Some of the popular techniques are classification, decision trees, regression, clustering, neural networks etc.

Operationalizing Analytics

A key objective of data analytics is to put results into the decision-maker's hands, so the information can be used and acted upon. For instance, fraud analytics can be plugged into a real-time Sales or Payment system to flag fraudulent transactions, or a recommendation engine can pull data from a Big Data API to make up-sell recommendations during the sales process.

Monetizing Big Data

Enterprises are developing Data Science capabilities to gain a fully functional 360-degree customer view. This view enables informed decisions that improve Customer Experience & Satisfaction, Customer Engagements & Interactions, Customer Loyalty & Recommendations, and Channels/Employee Productivity etc.; helps predict Customer Behavior, Customer Buying Patterns, Customer Movements and Travel Plans, Churn, and Cross Sell / Up Sell Opportunities; and helps detect Fraud & Spin, Security alerts etc. Enterprises can also use this data to find new revenue streams, develop new business models, or simply sell it to other companies that need these analytics to run their business.

However, enterprises need to ensure that, when exploiting data, they protect customer privacy and the security of the data under the different legal environments that apply.

Internal

Exploit data internally to generate leads for campaigns, and to support Marketing & Sales teams in data inquest and analysis that finds new revenue streams, reduces consumer churn, improves customer loyalty, or develops profitable data-driven business models.

External

Develop Data Insights for consumption by Industry Verticals via Data Stream and API.